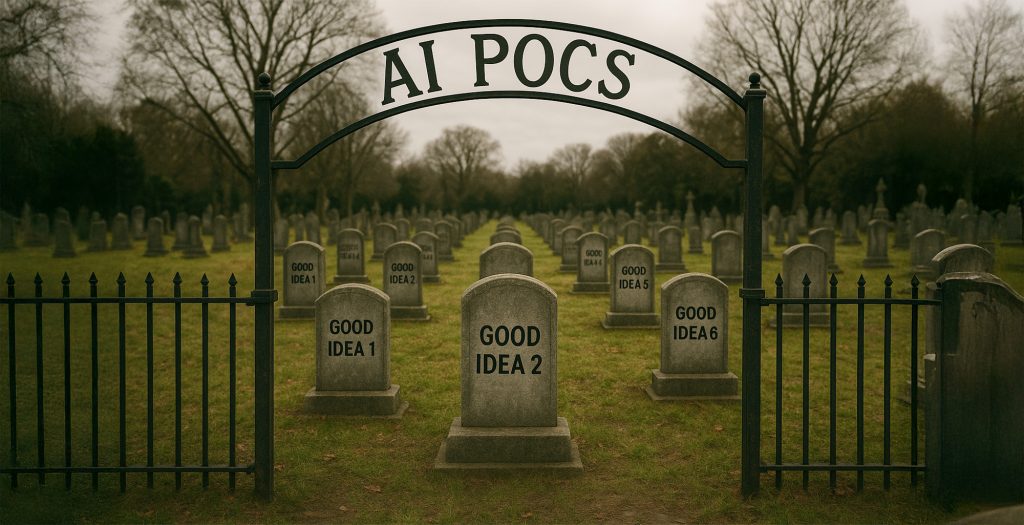

Why AI Proofs of Concept Are the Graveyard of Good Ideas (and How SMBs Can Actually Make AI Valuable)

Owners of small and medium businesses have already felt it: AI demos look magical, but the value never quite lands. We’ve been there too. Here’s the blunt truth, and a path out of the Proof-of-Concept graveyard.

The POC Trap: Lots of Demos, Little Delivery

We all love a quick win. Spin up a chatbot, wire up an LLM to your knowledge base, generate a few shiny slides, call it an AI Proof of Concept (POC) and move on. Six months later? Nothing in production, nothing on the P&L, and a sceptical team who now think “AI” means “Another Initiative”.

POCs are supposed to de-risk innovation. In practice, most become a holding pen for half-born ideas: no metrics, no operational plan, and no trained workforce to actually use the thing. They die quietly in a shared drive or a forgotten Notion page.

We call that backlog what it really is: a graveyard of ideas.

Why does this keep happening?

- The question is wrong. We ask “Can we build this?” instead of “Should we scale this?”

- No owner, no budget, no runway. A POC without a line-of-business sponsor and a change plan is just theatre.

- Untrained teams. If the people who should benefit aren’t trained to use AI, they won’t adopt it. That’s value leakage, pure and simple.

- Tech-first bias. Vendors sell features, while we need outcomes. Tools are easy. Process change is hard.

- Risk paralysis. Legal and compliance step in too late or too broadly, so everything stalls.

Sound familiar? Let’s reframe the game.

What Science’s “Slowdown” Teaches Us About AI in Business

Economists Ajay K. Agrawal, Avi Goldfarb and others have argued for years that when prediction gets cheap, the bottleneck shifts elsewhere, to judgment, integration and incentives. More recently, Kapoor & Narayanan’s analysis of scientific progress suggests that while output (papers) is accelerating, breakthroughs aren’t. Quantity up, quality flat.

That mirrors what we see in SMB AI projects. The number of experiments is exploding, but the number of production systems that create measurable value isn’t. The signal is being drowned out by noise.

Lesson: Volume of experimentation is not the metric. Velocity to value is.

From Proof of Concept to Proof of Value to Production

We stop the rot by changing the lifecycle:

- Problem → Hypothesis → Metric. Start with a business pain that has a price tag (hours wasted, revenue missed). Write a falsifiable hypothesis: “We believe an AI assistant will reduce quote turnaround time by 40%.”

- Proof of Value (PoV), not POC. In 2–4 weeks, show that the solution works and delivers a measurable outcome on real data with real users.

- Operationalise. Figure out who owns it, how it’s monitored, how staff are trained, how it plugs into processes.

- Scale or scrap. If the metric moved, scale. If not, kill it fast and take the learning.
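To make “scale or scrap” concrete, here is a minimal Python sketch of the go/no-go check for the quote-turnaround hypothesis above. It assumes you log the metric (here, hours from enquiry to quote) for a baseline period and for the PoV period, and it compares medians so a single outlier job can’t swing the decision. The class and function names and all sample figures are hypothetical, for illustration only.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Hypothesis:
    """A falsifiable PoV hypothesis, e.g. 'AI cuts quote turnaround by 40%'."""
    metric: str
    target_reduction: float  # 0.40 means "at least a 40% reduction"


def relative_reduction(baseline: list[float], pilot: list[float]) -> float:
    """Relative drop in the median metric from the baseline period to the PoV."""
    base = median(baseline)
    if base <= 0:
        raise ValueError("baseline median must be positive")
    return (base - median(pilot)) / base


def scale_or_scrap(h: Hypothesis, baseline: list[float], pilot: list[float]) -> str:
    """Go/no-go: scale if the metric moved by at least the target, else scrap."""
    achieved = relative_reduction(baseline, pilot)
    decision = "scale" if achieved >= h.target_reduction else "scrap"
    return f"{decision}: {h.metric} fell {achieved:.0%} (target {h.target_reduction:.0%})"


if __name__ == "__main__":
    # Hypothetical hours from enquiry to quote, before and during the PoV.
    baseline_hours = [48, 52, 50, 47, 55]
    pilot_hours = [28, 30, 26, 31, 29]
    h = Hypothesis("quote turnaround (hours)", 0.40)
    print(scale_or_scrap(h, baseline_hours, pilot_hours))
    # prints: scale: quote turnaround (hours) fell 42% (target 40%)
```

The point of writing the target down before the PoV starts is that the go/no-go review becomes a comparison, not a debate.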

A POC asks “Can it be done?” A PoV asks “Is it worth doing?”

Workforce AI Training: The Non-Negotiable Foundation

Put bluntly: without Workforce AI Training, you won’t get adoption. The tech isn’t the blocker; behaviour is. Your people need to know:

- How to prompt effectively and safely.

- When to trust, verify, or escalate.

- How to rewrite their own workflows so AI is embedded, not bolted on.

- Where the risk lines are (data privacy, IP leakage, bias, hallucinations).

We bake this into every engagement because we’ve learned the hard way that untrained teams bypass your prototype. Trained teams co-create with it and pull demand from the bottom up.

If you’re looking for Workforce AI Training that actually changes day-to-day behaviour, that’s what our AI Training programme is built to do.

The Value Equation: A Simple Way to Prioritise AI Ideas

When you evaluate an idea, run it through a brutally simple lens:

Value = (Time Saved or Revenue Gained) × Frequency × Adoption Probability ÷ Risk-and-Complexity Score

If an idea saves only 10 minutes but happens 500 times a week (over 80 staff-hours) and staff will definitely use it, that’s a goldmine. If it’s sexy but seldom used, park it.
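The value equation translates directly into a scoring function. This is a sketch, not a calibrated model: it assumes time saved is measured in hours per use, adoption probability is a team estimate between 0 and 1, and risk/complexity is a hypothetical 1–5 score where higher means riskier or harder. The two ideas and their numbers are invented to mirror the 10-minutes-times-500 example. Scores are only comparable between ideas measured in the same unit (hours or revenue, not a mix).

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    hours_saved_per_use: float   # time saved each time the task runs
    uses_per_week: float         # frequency
    adoption_probability: float  # team estimate, 0.0 to 1.0
    risk_complexity: float       # hypothetical 1-5 score, higher = riskier/harder


def value_score(idea: Idea) -> float:
    """Value = saving per use x frequency x adoption probability / risk-complexity."""
    if idea.risk_complexity <= 0:
        raise ValueError("risk_complexity must be positive")
    return (idea.hours_saved_per_use * idea.uses_per_week
            * idea.adoption_probability / idea.risk_complexity)


# Invented example ideas; replace with your own estimates.
ideas = [
    Idea("Board-pack generator", 3.0, 1, 0.5, 3),
    Idea("Quote email drafts", 10 / 60, 500, 0.9, 2),
]

# Highest value first: the frequent, well-adopted task wins despite saving less per use.
for idea in sorted(ideas, key=value_score, reverse=True):
    print(f"{idea.name}: {value_score(idea):.1f}")
```

Running it ranks “Quote email drafts” (score 37.5) far above “Board-pack generator” (0.5): the flashy idea saves more per use but almost never runs.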

Ask these seven questions before you green-light anything:

- What’s the measurable outcome? Hours, margin, error rate, conversion, NPS?

- Who owns the process today and tomorrow? Name them.

- Which data do we need, and is it accessible, clean and legal to use?

- How will frontline staff be trained and supported? (Yes, Workforce AI Training again.)

- How will we evaluate performance? Precision/recall, task success rate, cost per task, etc.

- What’s the risk profile (regulatory, reputational, operational), and how do we mitigate it?

- What does “production” mean here? SLA, uptime, monitoring, incident response.

If you can’t answer most of these on one page, you’re not ready for a POC, let alone production.
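For the fifth question, the metrics are cheap to compute once you have a labelled sample of real tasks. The sketch below assumes a hypothetical flagging task (for example, “does this invoice need human review?”) where a reviewer has recorded the correct answer, whether the whole task met its acceptance bar, and what it cost to run. Field names and the £ costs are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    flagged: bool          # the assistant said "needs human review"
    should_flag: bool      # the reviewer's ground-truth label
    task_succeeded: bool   # the whole task met its acceptance bar
    cost_gbp: float        # model and infrastructure cost for this task


def summarise(results: list[TaskResult]) -> dict[str, float]:
    """Precision/recall on the flag, plus task success rate and cost per task."""
    if not results:
        raise ValueError("need at least one result")
    tp = sum(r.flagged and r.should_flag for r in results)
    fp = sum(r.flagged and not r.should_flag for r in results)
    fn = sum(not r.flagged and r.should_flag for r in results)
    n = len(results)
    return {
        # Convention assumed here: report 0.0 when the denominator is empty.
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "task_success_rate": sum(r.task_succeeded for r in results) / n,
        "cost_per_task": sum(r.cost_gbp for r in results) / n,
    }


if __name__ == "__main__":
    sample = [
        TaskResult(True, True, True, 0.02),
        TaskResult(True, False, False, 0.02),
        TaskResult(False, True, False, 0.01),
        TaskResult(False, False, True, 0.01),
    ]
    print(summarise(sample))
```

Precision tells you how often a flag is worth a human’s time; recall tells you how many real problems slip through. Which one matters more depends on the cost of a wrong answer.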

Evals Are the New User Stories for AI

Traditional software has test cases; AI needs evaluations (evals). These are ongoing benchmarks that simulate real tasks. They’re your early-warning system, not a one-and-done quality-assurance step.

- Define evals at the task level (e.g., “Can the assistant draft a compliant invoice email within 3 minutes with ≥90% accuracy?”).

- Automate them so you see performance drift before customers do.

- Use evals to arbitrate between models, prompts, and guardrails.

We treat evals as living artefacts, just like user stories in Agile. They keep everyone honest and focused on user value.
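A task-level eval like the invoice-email example can start life as a small script that runs on every prompt or model change. This sketch makes several assumptions: `assistant` is any function that takes a request and returns a draft (swap in your real model call), “compliant” is crudely approximated by checking for required phrases, the 3-minute limit is applied to generation time only, and a drop of more than 5 percentage points below the last accepted pass rate counts as drift. All names, cases and thresholds here are hypothetical.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class EvalCase:
    """One task-level eval: a realistic request and what a good draft must contain."""
    request: str
    must_include: list[str]  # crude, hypothetical proxy for "compliant"


@dataclass
class EvalReport:
    pass_rate: float
    meets_bar: bool   # pass rate at or above the threshold
    drifted: bool     # pass rate fell noticeably below the last accepted run


def run_evals(
    assistant: Callable[[str], str],
    cases: list[EvalCase],
    max_seconds: float = 180.0,        # "within 3 minutes"
    pass_threshold: float = 0.90,      # "with >=90% accuracy"
    baseline: Optional[float] = None,  # pass rate from the last accepted run
    drift_tolerance: float = 0.05,     # assumed: >5 points below baseline = drift
) -> EvalReport:
    if not cases:
        raise ValueError("need at least one eval case")
    passed = 0
    for case in cases:
        start = time.perf_counter()
        draft = assistant(case.request).lower()
        elapsed = time.perf_counter() - start
        on_time = elapsed <= max_seconds
        complete = all(p.lower() in draft for p in case.must_include)
        if on_time and complete:
            passed += 1
    pass_rate = passed / len(cases)
    drifted = baseline is not None and baseline - pass_rate > drift_tolerance
    return EvalReport(pass_rate, pass_rate >= pass_threshold, drifted)


# Stand-in for a real model call: mentions payment terms but never a VAT number.
def stub_assistant(request: str) -> str:
    return f"Hello, regarding {request}. Payment terms: 30 days."


cases = [
    EvalCase("invoice 1042 for Acme Ltd", ["invoice 1042", "payment terms"]),
    EvalCase("invoice 1043 for Beta plc", ["invoice 1043", "payment terms", "VAT number"]),
]
print(run_evals(stub_assistant, cases, baseline=0.95))
```

With the stub, one of two cases passes, so the report shows a 0.5 pass rate, misses the 90% bar and flags drift against the 0.95 baseline. Running the same cases against each candidate model, prompt or guardrail and comparing the reports is how evals arbitrate between them.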

A 30-Day Sprint to Real Value (Our Playbook)

Here’s how we typically turn a promising idea into a Proof of Value:

Week 0: Alignment & Scoping

- One workshop with stakeholders to agree on the problem, metric, and constraints.

- Map the process today vs. tomorrow.

Weeks 1–2: Prototype & Train

- Build the narrowest possible solution to hit the metric.

- Run Workforce AI Training sessions so users know how to use, and stress-test, the prototype.

Weeks 3–4: Run the PoV

- Deploy to a small but real user group.

- Collect metrics, feedback, failure modes.

- Hold a go/no-go review. Scale or stop.

Output: Either a signed-off plan to operationalise (budget, owner, roadmap), or a decision to kill with documented learning. Both are wins because both reduce uncertainty.

Augment or Automate? A Quick Decision Matrix

Not every task should be automated. Sometimes the best move is to augment: give people leverage rather than replace them.

Ask:

- Is the task high stakes? (Legal filings vs. internal memos)

- Is the output easily verifiable? (Spreadsheet formulas vs. strategic advice)

- Is the context fluid or fixed? (Policies change weekly; product SKUs don’t.)

- What’s the cost of a wrong answer?

We aim for “human in the loop” (a person reviews every output) by default, “human on the loop” (a person monitors and can intervene) for stable, low-risk tasks, and “no human” only when automation is truly safer and cheaper.
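The questions above can be encoded as a small decision function, so the default is written down rather than argued case by case. This is one possible reading of the matrix, not a rule: it treats high stakes (or a costly wrong answer), hard-to-verify output, or fluid context as reasons to keep a human in the loop, and only allows “no human” when a task is low-risk, verifiable and stable and automation has been shown to be safer and cheaper. The function and enum names are hypothetical.

```python
from enum import Enum


class Oversight(Enum):
    IN_THE_LOOP = "human in the loop"  # a person reviews every output
    ON_THE_LOOP = "human on the loop"  # a person monitors and can intervene
    NO_HUMAN = "no human"              # fully automated


def choose_oversight(
    high_stakes: bool,                   # high stakes, or a wrong answer is costly
    easily_verifiable: bool,             # output can be checked quickly and cheaply
    context_stable: bool,                # rules/inputs are fixed, not changing weekly
    automation_safer_and_cheaper: bool,  # shown in the PoV, not assumed
) -> Oversight:
    # Any of these keeps the default: a human approves each output.
    if high_stakes or not easily_verifiable or not context_stable:
        return Oversight.IN_THE_LOOP
    # Stable, low-risk, checkable work: automate fully only with evidence.
    if automation_safer_and_cheaper:
        return Oversight.NO_HUMAN
    return Oversight.ON_THE_LOOP


if __name__ == "__main__":
    print(choose_oversight(True, False, True, False).value)   # legal filing
    print(choose_oversight(False, True, True, False).value)   # internal memo
```

Keeping “safer and cheaper” as an explicit input, rather than a guess baked into the function, forces that claim to come from PoV evidence.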

Culture Eats Use-Cases for Breakfast

In our experience, the biggest predictor of AI success inside SMBs isn’t model choice. It’s culture.

- Leaders signal seriousness by budgeting for training, not just tooling.

- Teams feel safe to experiment when failure is logged as learning, not a black mark.

- Ops and compliance are partners, involved from day one, not last-minute blockers.

Get this right and you’ll see AI ideas bubble up from the frontline. Get it wrong and you’ll keep running into resistance.

Common Anti-Patterns We Kill Early

- “We’ll just get an intern to try GPT.” Great for learning, useless for sustainable value.

- “Let’s integrate every tool we use.” Shiny-object syndrome. Focus on one process that matters.

- “Legal will look at it later.” Invite them now. They’ll help you move faster, not slower.

- “We need a custom model.” The vast majority of SMB use-cases don’t. Spend that budget on training and process change.

Making AI Valuable: A Checklist You Can Steal

Use this as your pre-flight for every AI initiative:

- We can state the business problem in one sentence and put a £/$ figure on it.

- We’ve defined success metrics and an eval framework upfront.

- We know who owns the process and the budget post-PoV.

- Our people have (or will get) Workforce AI Training before rollout.

- We understand the data, security, and compliance implications.

- We’ve planned how this will operate in production (monitoring, updates, support).

- We will decide to scale or scrap within 30 days of starting.

Print it. Stick it on a wall. Hold yourselves to it.

The Payoff: Micro-Wins Compound

When you shift from lab demos to production value, the gains start to compound:

- Your staff start to pull for more AI because they see results.

- You build libraries of prompts, evals, and guardrails that make the next project faster.

- You create a culture where experimentation is fast but disciplined.

- Most importantly, your competitors are still stuck in POC-land while you’re quietly automating the boring, scaling the valuable, and freeing people for work that matters.

Ready to Get Out of the Graveyard?

We help SMBs move from AI curiosity to operational value, fast. If you want to:

- Train your teams so they actually use AI safely and effectively,

- Run a focused AI Proof of Value instead of another Proof of Concept,

- Build a roadmap that makes AI valuable quarter after quarter,

…then let’s talk.

👉 Visit our AI Training page and we’ll show you exactly how we turn scepticism into ROI.

We believe small and medium businesses shouldn’t have to cycle through the POC graveyard. Let’s make AI valuable, by design.